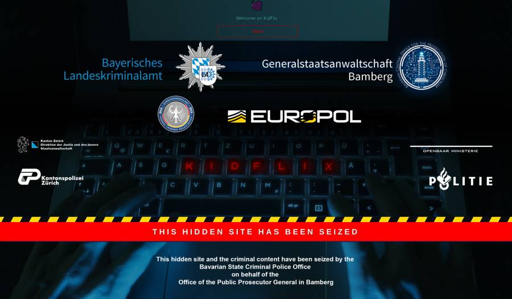

They also seized 72,000 illegal videos from the site and personal information of its users, resulting in arrests of 1,400 suspects around the world.

Wow

Does it feel odd to anyone else that a platform for something this universally condemned in any jurisdiction can operate for 4 years, with a catchy name clearly thought up by a marketing person, its own payment system, and a nearly six-figure number of videos? I mean, even if we assume that some of those 4 years were intentional, to allow law enforcement to catch as many perpetrators as possible, this feels too similar to fully legal operations in scope.

It’s a side effect of privacy and security. The one side effect they’re trying to use to undermine all of the privacy and security.

This has nothing to do with privacy! Criminals have their techniques and methods to protect themselves and their “businesses” from discovery, both in the real world and in the online world. Even in a complete absence of privacy they would find a way to hide their stuff from the police - at least for a while.

In the real world, criminals (e.g. drug dealers) also use cars, so you could argue that drug trafficking is a side effect of people having cars…

This platform used Tor. And because we want to protect privacy, they can make use of it.

This particular platform used Tor. It doesn’t mean all platforms are using privacy-centric anonymous networks. There are incidents with people using Kik, Snapchat, Facebook and other clearnet services to perform criminal actions such as drugs or CP.

For anyone interested in the Kik controversy: There’s a great episode of the Darknet Diaries podcast about it: https://darknetdiaries.com/episode/93/

Illegal businesses can operate online for a long time if they have good OpSec. Anonymous payment systems are much easier these days because of cryptocurrencies.

Is that why Trump is so for them?

Yeah, more or less

It definitely seems weird how easy it is to stumble upon CP online, and how open people are about sharing it, with no effort made, in many instances, to hide what they’re doing. I’ve often wondered how much of the stuff is spread by pedo rings and how much is shared by cops trying to see how many people they can catch with it.

If you have stumbled on CP online in the last 10 years, you’re either really unlucky or trawling some dark waters. This ain’t 2006. The internet has largely been cleaned up.

I don’t know about that.

I spot most of it while looking for out-of-print books about growing orchids on the typical file-sharing networks. The term “blue orchid” seems to be frequently used in file names of things that are in no way related to gardening. The eMule network is especially bad.

When I was looking into messaging clients a couple years ago, to figure out what I wanted to use, I checked out a public user directory for the Tox messaging network and it was maybe 90% people openly trying to find, or offering, custom made CP. On the open internet, not an onion page or anything.

Then maybe last year, I joined openSUSE’s official Matrix channels, and some random person (who, to be clear, did not seem connected to the distro) invited me to join a room called openSUSE Child Porn, with a room logo that appeared to be an actual photo of a small girl being violated by a grown man.

I hope to god these are all cops, because I have no idea how there can be so many pedos just openly doing their thing without being caught.

typical file-sharing networks

Tox messaging network

Matrix channels

I would consider all of these to be trawling dark waters.

File-sharing and online chat seem like basic internet activities to me.

This ain’t the early 2000s. The unwashed masses have found the internet, and it has been cleaned for them. 97% of the internet has no idea what Matrix channels even are.

97% of the internet has no idea what Matrix channels even are.

I’ve been able to explain it to people pretty easily as “like Discord, but without Discord administration getting to control what’s allowed, only whoever happens to run that particular server.”

Search “AI woman porn miniskirt,” and tell me you don’t see questionable results in the first 2 pages, of women who at least appear possibly younger than 18. Because AI is so heavily corrupted with this content en masse, this has leaked over to Google searches in most porn categories being corrupted with AI seeds that can be anything.

Fuck, the head guy of Reddit, u/spez, was the main mod of r/jailbait before he changed the design of Reddit so he could hide mod names. Also, look into the u/MaxwellHill / Ghislaine Maxwell conspiracy on Reddit.

There are very weird, very large movements regarding illegal content (whether you intentionally search it or not) and blackmail and that’s all I will point out for now

Search “AI woman porn miniskirt,”

Did it with safesearch off and got a bunch of women clearly in their late teens or 20s. Plus, I don’t want to derail my main point, but I think we should acknowledge the difference between a picture of a real child actively being harmed vs a 100% fake image. I didn’t find any AI CP, but even if I did, it’s in an entirely different universe of morally bad.

r/jailbait

That was, what, fifteen years ago? It’s why I said “in the last decade”.

“Clearly in their late teens,” lol no. And since AI doesn’t have age, it’s possible that was seeded with the face of a 15yr old and that they really are 15 for all intents and purposes.

Obviously there’s a difference with AI porn vs real, that’s why I told you to search AI in the first place??? The convo isn’t about AI porn, but AI porn uses images to seed their new images including CSAM

It’s fucking AI, the face is actually like 3 days old because it is NOT A REAL PERSON’S FACE.

We aren’t even arguing about this, you giant creep who ALWAYS HAS TO GO TO BAT FOR THIS TOPIC REPEATEDLY.

It’s meant to LOOK LIKE a 14 yr old because it is SEEDED OFF 14 YR OLDS so it’s indeed CHILD PORN that is EASILY ACCESSED ON GOOGLE per the original commenter claim that people have to be going to dark places to see this - NO, it’s literally in nearly ALL AI TOP SEARCHES. And it indeed counts for LEGAL PURPOSES in MOST STATES as child porn even if drawn or created with AI. How many porn AI models look like Scarlett Johansson because they are SEEDED WITH HER FACE? Now imagine who the CHILD MODELS are seeding from.

You’re one of the people I am talking about when I say Lemmy has a lot of creepy pedos on it. FYI to all the readers, look at their history.

was seeded with the face of a 15yr old and that they really are 15 for all intents and purposes.

That’s…not how AI image generation works? AI image generation isn’t just building a collage from random images in a database - the model doesn’t have a database of images within it at all - it just has a bunch of statistical weightings and net configuration that are essentially a statistical model for classifying images, being told to produce whatever inputs maximize an output resembling the prompt, starting from a seed. It’s not “seeded with an image of a 15 year old”, it’s seeded with white noise and basically asked to show what that white noise would look like as (in this case) “woman porn miniskirt”, then repeat a few times until the resulting image is stable.

Unless you’re arguing that somewhere in the millions of images tagged “woman” being analyzed to build that statistical model is probably at least one person under 18, and that any image of “woman” generated by such a model is necessarily underage because the weightings were impacted however slightly by that image or images, in which case you could also argue that all drawn images of humans are underage because whoever drew it has probably seen a child at some point and therefore everything they draw is tainted by having been exposed to children ever.

Yes, it is seeded with kids’ faces, including specific children like someone’s classmate. And yes, those children, all of them, who it uses as a reference to make a generic kid face, are all being abused because it’s literally using their likeness to make CSAM. That’s quite obvious.

It would be different if the AI was seeding models from cartoons, but it’s from real images.

During the investigation, Europol’s analysts from the European Cybercrime Centre (EC3) provided intensive operational support to national authorities by analysing thousands of videos.

I don’t know how you can do this job and not get sick because looking away is not an option

You do get sick, and I would be most surprised if they didn’t allow people to look away and take breaks/get support as needed.

Most emergency line operators and similar kinds of inspectors get them, so it would be odd if they did not.

Yes, my wife used to work in the ER. She still tells the same stories over and over again 15 years later, because the memories of the horrible shit she saw don’t go away.

Indeed, but in my country the psychological support is even mandatory. Furthermore, I know there have been pilots using ML to go through the videos. When the system detects explicit material, an officer has to confirm it. But it prevents them from going through it all day, every day, for each video. I think Microsoft has also been working on a database of hashes that LEO provides to automatically detect material that has already been identified. All in all, a gruesome job, but fortunately technology is alleviating the harshest activities bit by bit.

And this is for law enforcement level of personnel. Meta and friends just outsource content moderation to low-wage countries and let the poors deal with the PTSD themselves.

Let’s hope that’s what AI can help with, instead of techbrocracy

I’m sure many of them numb themselves to it, and pretend it isn’t real in order to do the job. Then unfortunately, I’m sure some of them get addicted themselves.

Similar to undercover cops who do drugs while undercover, then get addicted to the drugs.

And it didn’t even require sacrificing encryption huh!

“See, we caught these guys without doing it, think of how many more we can catch if we do! Like all the terrorists America has caught by violating their privacy. …Maybe some day they will.”

Fuck man. I used to use a program called “Kidpix” when I was a kid. It was like ms paint but with fun effects and sounds.

I used to love the dynamite tool!

1.8m users, how the hell did they run that website for 3 years?

That’s unfortunately (not really sure) probably the fault of Germany’s approach to that. The usual approach is not to take these websites down but to try to find the guys behind them and arrest them. The argument is: they will just use a backup and start a “KidFlix 2” or something like that. Some investigations show that this is not the case and deleting is very effective. Also, the German approach completely ignores the victim side. They have to deal with old men masturbating to them getting raped online. Very disturbing…

Honestly, if the existing victims have to deal with a few more people masturbating to the existing video material, and in exchange it leads to fewer future victims, it might be worth the trade-off, but it is certainly not an easy choice to make.

Well, some pedophiles have argued that AI generated child porn should be allowed, so real humans are not harmed and exploited.

I’m conflicted on that. Naturally, I’m disgusted and repulsed. I AM NOT ADVOCATING IT.

But if no real child is harmed…

I don’t want to think about it anymore.

Understand you’re not advocating for it, but I do take issue with the idea that AI CSAM will prevent children from being harmed. While it might satisfy some of them (at first, until the high from that wears off and they need progressively harder stuff), a lot of pedophiles are just straight up sadistic fucks and a real child being hurt is what gets them off. I think it’ll just make the “real” stuff even more valuable in their eyes.

I feel the same way. I’ve seen the argument that it’s analogous to violence in videogames, but it’s pretty disingenuous since people typically play videogames to have fun and for escapism, whereas with CSAM the person seeking it out is doing so in bad faith. A more apt comparison would be people who go out of their way to hurt animals.

A more apt comparison would be people who go out of their way to hurt animals.

Is it? That person is going out of their way to do actual violence. Arguing that watching a slasher movie makes someone more likely to go commit murder feels like a much closer analogy to arguing that watching a cartoon of a child engaged in sexual activity or whatever makes someone more likely to molest a real kid.

We could make it a video game about molesting kids, with Postal or Hatred as our points of comparison, if it would help. I’m sure someone somewhere has made such a game, and I’m absolutely sure you’d consider COD to be for “fun and escapism” and someone playing that sort of game to be doing so “in bad faith”, despite both being simulations of something that is definitely illegal, and despite the core of the argument being that one causes the person to want to do the illegal thing more and the other does not.

With everything going on right now, the fact that I still feel physically sick reading things like this tells me I haven’t gone completely numb yet. Just absolutely repulsive.